ChatGPT is here whether we like it or not. Good, bad, or indifferent, it is gaining the attention of many educators. Before tackling its use or its implications in the classroom, it helps to know what it is, how it works, and what limitations and risks come with it.

What is ChatGPT?

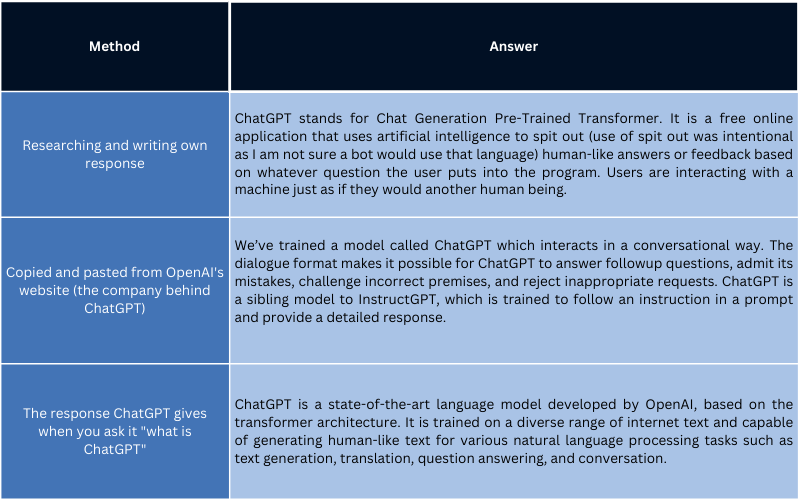

To define ChatGPT, I thought it would be neat to do a little exercise that lets you compare and contrast what students in your classroom might do when answering a writing prompt. I will answer the prompt using three different methods: 1) researching and writing my own response; 2) copying and pasting something from a website; and 3) asking ChatGPT for a response. For this exercise, the writing prompt is “What is ChatGPT?”

Here are the three definitions via the three methods listed above:

How does it work?

ChatGPT is a language model that was trained on massive amounts of text from the internet and other sources (social media, text from emails, transcripts of customer support calls, etc.). It uses the patterns it learned from that data to formulate its response extremely fast. It was also trained on dialogues, which is why it feels like you are talking to a human.
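For readers who like to see an idea in code, here is a deliberately tiny sketch of what "formulating a response from text it has analyzed" can mean. It is not how ChatGPT is actually built (ChatGPT relies on a very large neural network). It is a toy that counts which word tends to follow which in a sample sentence and then predicts the most likely next word. The function names and the sample text are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, chosen only to keep the example small.
training_text = "the cat sat on the mat . the cat chased the mouse ."
model = train_bigram_model(training_text)

print(predict_next_word(model, "the"))    # cat  ("cat" follows "the" most often)
print(predict_next_word(model, "robot"))  # None ("robot" never appears in the text)
```

Even this toy shows two things that carry over to the real program: the prediction depends entirely on the text it was trained on, and it has nothing to offer for a word it has never seen.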

What can you ask ChatGPT?

Just about anything. It can perform tasks like composing emails and essays, debugging or writing code, explaining concepts, correcting grammar, writing lesson plans or practice plans, creating or grading assignments based on rubrics, and more.

Here are some examples of things you can ask:

- Write an essay about…

- Create a poem based on…

- Translate…into Greek, Spanish, etc.

- Explain quantum computing in simple terms

- Got any creative ideas for a 10-year-old's birthday?

- How do I make an HTTP request in JavaScript?

What are some of its limitations/risks?

The program is only as good as the data it has been trained on. Gaps and biases in the training data can degrade the results ChatGPT generates. It is important to note that ChatGPT:

- can provide wrong answers.

- struggles with situations it has not seen in its training data. Because it was trained only on data through September 2021, it does not know about more recent data and events.

- is not able to provide personal or emotional support or engage in deep conversations about complex topics.

- can produce harmful instructions or biased content.

- lacks the capacity for expression and the critical and ethical thinking skills that people possess.